The AI Act, or Artificial Intelligence Regulation (EU) 2024/1689, establishes a legal framework for regulating artificial intelligence technologies. It aims to support innovation while addressing possible risks to people’s health, safety or fundamental rights.

It contains requirements that cover artificial intelligence (AI) systems and AI models, including certain products that make use of AI technologies. Furthermore, the regulation categorises certain AI systems as high-risk AI systems, for which there are additional requirements such as CE marking and a Declaration of Conformity.

In this guide, we explain the regulation’s requirements and how they affect providers and other economic operators involved in AI technologies, including manufacturers of products that use AI systems.

What is the AI Act?

The AI Act is a regulation that provides a legal framework for regulating the use of AI systems, AI models and certain products in the EU. The regulation aims to protect consumers and society against the harmful effects of AI systems and support innovation.

Article 1(1): The purpose of this Regulation is to improve the functioning of the internal market and promote the uptake of human-centric and trustworthy artificial intelligence (AI), while ensuring a high level of protection of health, safety, fundamental rights enshrined in the Charter, including democracy, the rule of law and environmental protection, against the harmful effects of AI systems in the Union and supporting innovation.

The regulation sets the following types of provisions:

a. Rules for placing on the market, putting into service, and using AI systems

b. Prohibited AI practices

c. Rules for high-risk AI systems and their operators

d. Transparency rules for certain AI systems

e. Rules affecting general-purpose AI models

f. Rules on enforcement

g. Measures relating to supporting innovation

What does the AI Act cover?

The regulation covers AI systems and general-purpose AI models. The regulation also contains specific provisions relating to a certain class of AI systems called “high-risk AI systems”. More information about this type of system and the requirements affecting it is provided in the sections below.

Article 1(2): This Regulation lays down:

(a) harmonised rules for the placing on the market, the putting into service, and the use of AI systems in the Union;

(b) prohibitions of certain AI practices;

(c) specific requirements for high-risk AI systems and obligations for operators of such systems;

(d) harmonised transparency rules for certain AI systems;

(e) harmonised rules for the placing on the market of general-purpose AI models;

(f) rules on market monitoring, market surveillance, governance and enforcement;

(g) measures to support innovation, focusing on SMEs, including start-ups.

Additionally, according to Article 2, the regulation applies to:

a. Providers and deployers of AI systems or general-purpose AI models

b. Importers and distributors of AI systems

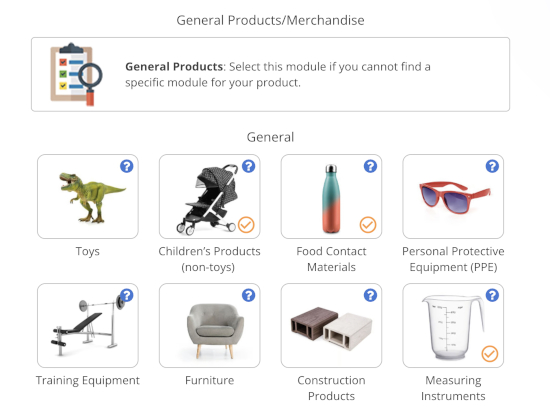

c. Manufacturers of products that:

- Make use of AI systems in their safety components, or

- Are AI systems that are themselves products covered by the regulations listed in Annex I (e.g. the Toy Safety Directive)

Note that the definitions of provider and other operators can be found in Article 3. For example, a “provider” is defined as a company or other body that either:

a. Develops an AI system or a general-purpose AI model, or

b. Has an AI system or a general-purpose AI model developed and places it on the market or puts it into service under its own name or trademark, whether for payment or free of charge

Also, according to Article 25, a product manufacturer should be considered the provider if it sells a high-risk AI system under its own trademark.

What is an AI system?

The AI Act defines an AI system as a machine-based system that has the following characteristics:

a. It is designed to operate with varying levels of autonomy

b. It may show adaptiveness after deployment

c. It infers, from the input it receives, how to generate outputs such as predictions, content, recommendations, or decisions that can influence physical or virtual environments

What is a high-risk AI system?

The regulation classifies certain AI systems as “high-risk AI systems” and contains specific requirements affecting them. An AI system is considered to be high-risk if it meets both of the following conditions:

a. The AI system is used as a safety component of a product or is itself a product covered by the EU regulations listed in Annex I

b. The applicable EU regulations listed in Annex I require the product to undergo a third-party conformity assessment (that is, notified body involvement is necessary).

Here are some examples of regulations that are listed in Annex I:

- Machinery Directive

- Toy Safety Directive

- Radio Equipment Directive

- Personal Protective Equipment Regulation

- Gas Appliances Regulation

- Medical Devices Regulation

Additionally, the regulation classifies AI systems as high-risk if they are used in the areas listed in Annex III, such as:

- Biometrics

- Critical infrastructure

- Education and vocational training

- Employment, workers’ management and access to self-employment

- Access to and enjoyment of essential private services and essential public services and benefits

- Law enforcement

- Migration, asylum and border control management

- Administration of justice and democratic processes
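The two classification routes described above can be sketched as a simple decision rule. This is only an illustrative sketch: the class name, field names and abbreviated area labels are hypothetical, and the code does not model any exceptions or detailed conditions that the regulation itself may provide.

```python
from dataclasses import dataclass
from typing import Optional

# Annex III areas as listed in this guide (abbreviated, illustrative labels).
ANNEX_III_AREAS = {
    "biometrics",
    "critical infrastructure",
    "education and vocational training",
    "employment",
    "essential services",
    "law enforcement",
    "migration and border control",
    "justice and democratic processes",
}


@dataclass
class AISystemProfile:
    """Hypothetical description of an AI system; field names are assumptions."""
    annex_i_product_or_safety_component: bool = False
    third_party_assessment_required: bool = False
    annex_iii_area: Optional[str] = None


def is_high_risk(profile: AISystemProfile) -> bool:
    # Route 1: both Annex I conditions must hold at the same time.
    annex_i_route = (
        profile.annex_i_product_or_safety_component
        and profile.third_party_assessment_required
    )
    # Route 2: the system is used in one of the Annex III areas.
    annex_iii_route = profile.annex_iii_area in ANNEX_III_AREAS
    return annex_i_route or annex_iii_route


if __name__ == "__main__":
    toy_with_ai = AISystemProfile(True, True)
    print(is_high_risk(toy_with_ai))
```

A real assessment depends on the legal text and the facts of the product, so a rule like this is at most a first screening aid.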

What is a general-purpose AI model?

The regulation defines a general-purpose AI model as an AI model that:

- Displays significant generality

- Is capable of competently performing a wide range of distinct tasks

- Can be integrated into a variety of downstream systems or applications

This would include, for example, large generative AI models that can generate content in the form of text, audio, images or video.

Chapter V of the regulation sets requirements for providers of general-purpose AI models, including additional requirements for general-purpose AI models with systemic risks.

General-purpose AI models with systemic risks are AI models that have high-impact capabilities, as evaluated according to the criteria in Article 51 of the regulation. For example, a model is presumed to have high-impact capabilities when the cumulative amount of computation used for its training is greater than 10^25 floating-point operations.
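As a rough illustration of the computation criterion, Article 51(2) presumes high-impact capabilities when cumulative training compute is greater than 10^25 floating-point operations. The sketch below compares an estimate against that figure. The function names are hypothetical, and the `6 * parameters * tokens` estimate is a common rule of thumb for dense models, not something the regulation prescribes.

```python
# Presumption threshold from Article 51(2), in floating-point operations.
SYSTEMIC_RISK_FLOP_THRESHOLD = 1e25


def estimate_training_flops(n_parameters: float, n_training_tokens: float) -> float:
    """Rough estimate using the 6 * N * D heuristic (an assumption, not a legal method)."""
    return 6.0 * n_parameters * n_training_tokens


def presumed_high_impact(training_flops: float) -> bool:
    """True when the compute estimate is greater than the presumption threshold."""
    return training_flops > SYSTEMIC_RISK_FLOP_THRESHOLD


if __name__ == "__main__":
    # Hypothetical model: 70 billion parameters trained on 15 trillion tokens.
    flops = estimate_training_flops(70e9, 15e12)
    print(f"{flops:.2e}", presumed_high_impact(flops))
```

The compute figure is only one of the criteria in Article 51, so a result below the threshold does not by itself settle whether a model has systemic risks.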

Exemptions

Exemptions can be found in Article 2, and include the following:

a. Areas outside the scope of EU law

b. AI systems used exclusively for military, defence or national security purposes

c. Certain AI systems used by public authorities in a third country or international organisations

d. AI systems or AI models used for the sole purpose of scientific research and development

e. EU law on personal data protection and privacy, which the regulation does not affect

f. Any research, testing or development activity regarding AI systems or AI models before they are sold or put into service

g. People using AI systems for purely personal and non-professional activities

h. Certain AI systems released under free and open-source licenses

What practices are prohibited for AI systems?

The regulation prohibits certain uses of AI systems. Here are some examples of the prohibited practices taken from Article 5:

a. AI systems that deploy subliminal techniques to impair a person’s ability to make an informed decision and cause significant harm.

b. AI systems that exploit any of the vulnerabilities of a natural person or a specific group of persons (e.g. due to their age) to manipulate their behaviour and cause significant harm.

c. AI systems that create or expand facial recognition databases via the untargeted scraping of facial images from the internet or CCTV footage.

What are the requirements for high-risk AI systems?

In this section, we present the key requirements relating to high-risk AI systems.

Standards

Harmonised standards generally offer a recognised way to demonstrate compliance with the technical requirements set by a regulation. However, we could not find any published harmonised standards under the regulation, possibly because the legislation is new.

Note that where no harmonised standards are applied, the Quality Management System documentation must explain how you ensure that the AI system complies with the regulation’s requirements.

You could, for example, use non-harmonised standards. We found the following non-harmonised standards relating to artificial intelligence listed by CEN-CENELEC:

a. EN ISO/IEC 23053 – Framework for Artificial Intelligence (AI) Systems Using Machine Learning (ML)

b. EN ISO/IEC 23894 – Information technology – Artificial intelligence – Guidance on risk management

c. EN ISO/IEC 8183 – Information technology – Artificial intelligence – Data life cycle framework

Declaration of Conformity

For each high-risk AI system, providers must draw up a machine-readable Declaration of Conformity that is physically or electronically signed. The Declaration of Conformity must contain the information set out in Annex V.

The Declaration of Conformity should be translated into languages that are easily understood by the relevant authorities of the member states in which the high-risk AI system is made available.

Technical documentation

Providers of high-risk AI systems must draw up technical documentation. It is used to assess the compliance of the AI system with the relevant requirements of the regulation.

It must contain the information set out in Annex IV of the regulation. The regulation states that SMEs and start-ups can provide a simplified version of the technical documentation. However, we could not find any further information on this.

Labelling requirements

The CE marking must be affixed to the high-risk AI system, where possible. Where appropriate, the CE marking must be affixed to the packaging or accompanying documentation.

For AI systems provided digitally, a digital CE marking must be used if the marking can be easily accessed through:

- An interface

- Machine-readable code

- Other electronic means

If a notified body is responsible for the conformity assessment of the AI system, the CE marking must be followed by the notified body’s identification number. Further, the number should be indicated in any promotional material that asserts the AI system’s fulfilment of CE marking requirements.

Additionally, the following traceability information must appear on the high-risk AI system, its packaging or accompanying documentation:

- Provider’s name, registered trade name or registered trade mark

- Provider’s contact address

Quality management system

Providers of high-risk AI systems must establish a quality management system to ensure compliance with the regulation. The system must be documented in the form of:

- Written policies

- Procedures

- Instructions

The regulation contains a long list of content that the documentation must include. Here are some examples of information that must be included in the quality management system documentation:

a. The regulatory compliance strategy

b. Examination, test and validation procedures

c. Information on the application of technical specifications and standards

d. Information on the establishment and operation of a post-market monitoring system

e. Serious incident reporting procedures

Other requirements

The regulation contains many other requirements that affect high-risk AI systems. Below are some additional requirements that we found in the regulation.

| Requirement | Description |
| --- | --- |
| Conformity assessment procedures | Depending on the type of high-risk AI system, the regulation prescribes a procedure that the provider should follow to demonstrate that the requirements of the regulation have been fulfilled. Details about the conformity assessment procedures are listed in Article 43. Note that some procedures involve engaging a notified body. |
| Risk management system | There must be a risk management system to: (a) identify risks posed by the high-risk AI system to health, safety and fundamental rights; (b) adopt measures to address the identified risks. |
| Data and data governance | High-risk AI systems that employ techniques involving the training of AI models with data must be developed based on training, validation and testing data sets that meet the quality criteria in Article 10. The data sets must follow the applicable data governance and management practices related to the intended purpose of the AI system. |
| Record-keeping | The high-risk AI system must allow for the automatic recording of events throughout its lifetime. The providers of the AI system must keep the generated logs for a period appropriate to the intended purpose of the AI system. Additionally, providers should also keep documentation relating to the AI system (see Article 18). |
| Transparency requirements | The high-risk AI system must be sufficiently transparent to allow deployers to understand the system’s output and use it appropriately. |
| Instructions for use | The high-risk AI system must come with instructions for use in a digital format or some other format that is accessible to the deployer. Content requirements are contained in Article 13 of the regulation. |
| Oversight measures | The high-risk AI system must be designed so that it can be effectively overseen by people. Human oversight is required to prevent or reduce risks relating to the use of the AI system. Details of the oversight measures are found in Article 14 of the regulation. |
| Accuracy, robustness and cybersecurity | High-risk AI systems must achieve an appropriate level of accuracy, robustness, and cybersecurity. They must perform consistently throughout their lifecycle. |
| Accessibility requirements | The high-risk AI system must be accessible to the extent that it complies with: (a) Directive (EU) 2016/2102 on accessibility to websites and mobile applications; (b) Directive (EU) 2019/882 on accessibility requirements for products and services (e.g. e-readers). |
| Authorised representatives | Providers of high-risk AI systems who are established in third countries must appoint an EU authorised representative by written mandate. |
| Registration | Before selling or putting into service a high-risk AI system that is listed in Annex III (except for those in point 2 of the Annex), the provider or its authorised representative must first register in a public EU database. However, high-risk AI systems listed in point 2 of Annex III must be registered at the national level. We could not find any evidence that such a database exists yet, possibly because the regulation is new. |
| Post-market monitoring requirements | Providers must have a post-market monitoring system and plan in place. This is to allow the provider to evaluate whether the AI system continues to comply with the requirements of the regulation. |
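The record-keeping requirement in the table above calls for automatic recording of events. One possible way to approach this is to emit one structured JSON line per event. The following is only a minimal sketch using Python’s standard `logging` module: the logger name, function names and event fields are hypothetical, and the regulation, not this example, determines which events must be logged and for how long.

```python
import io
import json
import logging
from datetime import datetime, timezone


def build_event_logger(stream) -> logging.Logger:
    """Create a logger that writes one JSON document per line to `stream`."""
    logger = logging.getLogger("ai_system_events")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.handlers.clear()  # avoid duplicate handlers on repeated calls
    handler = logging.StreamHandler(stream)
    handler.setFormatter(logging.Formatter("%(message)s"))
    logger.addHandler(handler)
    logger.propagate = False
    return logger


def record_event(logger: logging.Logger, event_type: str, details: dict) -> dict:
    """Record a single event with a UTC timestamp (field names are assumptions)."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": event_type,
        "details": details,
    }
    logger.info(json.dumps(entry, sort_keys=True))
    return entry


if __name__ == "__main__":
    buffer = io.StringIO()
    events = build_event_logger(buffer)
    record_event(events, "inference", {"input_id": "example-001"})
    print(buffer.getvalue().strip())
```

In practice, the stream would point to durable storage with a retention period suited to the intended purpose of the AI system.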

What are the requirements for general-purpose AI models?

The regulation contains several requirements for general-purpose AI models. Here are some examples of requirements affecting providers of such models:

a. Providers must draw up and keep up to date the technical documentation required by Annex XI for the AI model

b. Providers must create and make available information and documentation as required in Annex XII to providers of AI systems using the AI model

c. Providers must establish a policy to comply with EU law on copyright and related rights

A full list of requirements can be found in Article 53 of the regulation.

The regulation contains additional requirements for providers of general-purpose AI models with systemic risks. Here are some examples of requirements affecting providers of such AI models:

a. Perform model evaluation following standardised protocols according to the state of the art.

b. Assess and mitigate possible systemic risks at the Union level

c. Ensure an adequate level of cybersecurity protection for the AI model

A full list of requirements can be found in Article 55 of the regulation.

Product testing

Providers of AI systems and models must comply with the technical requirements of the regulation (like accuracy, robustness and cybersecurity requirements).

This can be done, for example, by using non-harmonised standards that set relevant test methods and procedures, or other methods. It is also possible that harmonised standards will be available soon.

We could not find any lab testing companies that explicitly mentioned that they could test against the requirements of the regulation. However, we did find a company that offers testing solutions to validate the performance of AI software: TestDevLab, headquartered in Riga, Latvia.

TestDevLab claims to offer the following services:

- Functional and performance testing

- Usability testing

- Security testing

- Data validation

- Model evaluation

- AI behaviour explanation

- Robustness validation

Note that we cannot confirm whether the services offered are sufficient to satisfy the requirements of the regulation.

FAQ

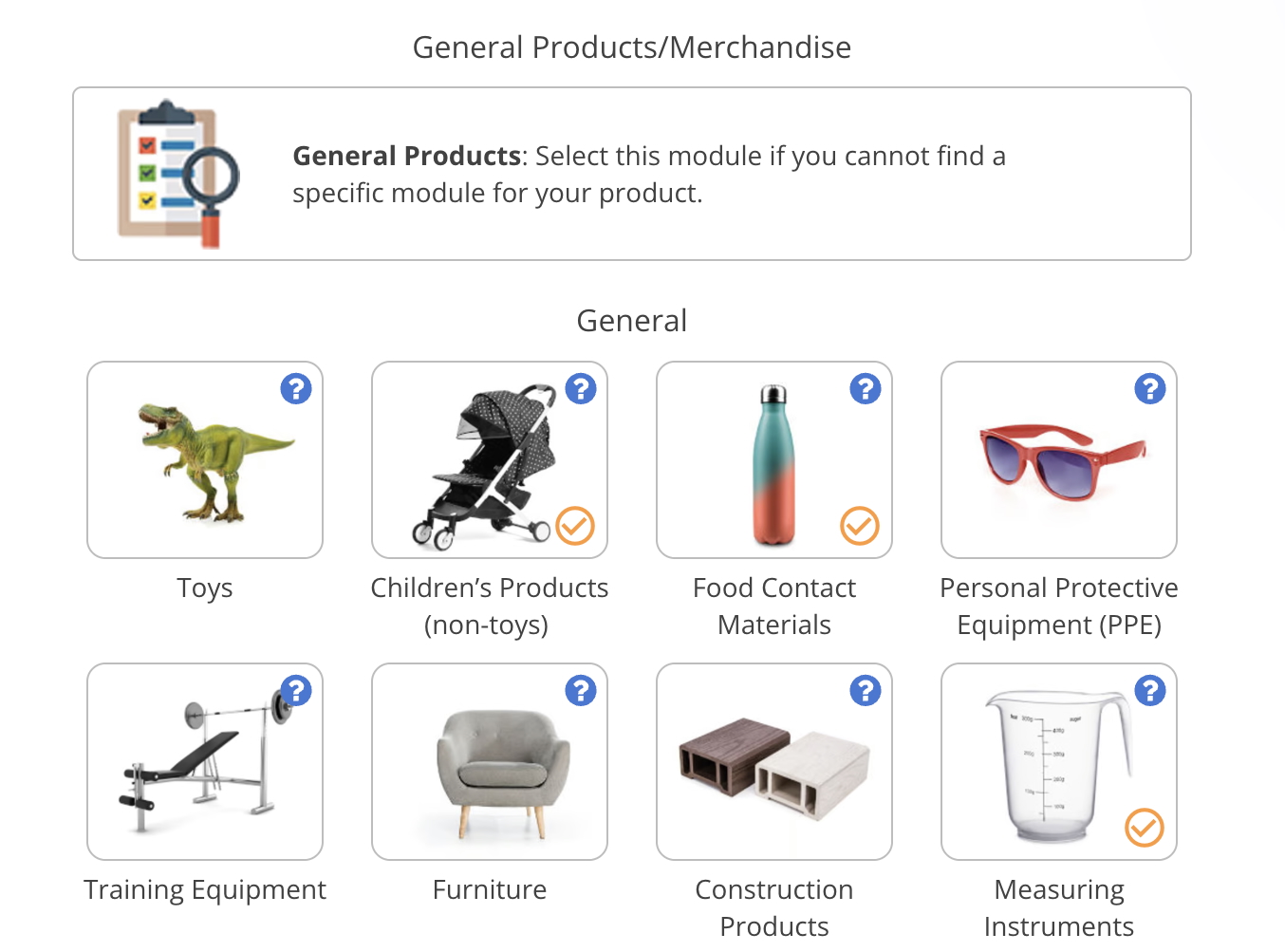

Are consumer products covered by the AI Act?

Yes, physical consumer products that use AI systems are covered. For example, the regulation contains requirements for certain personal protective equipment, electronics, and toy products which make use of AI systems.

How do I know whether an AI system is a high-risk AI system?

An AI system is a high-risk AI system if it either:

a. Falls under one of the areas mentioned in Annex III of the regulation (e.g. remote biometric identification systems).

b. Is intended to be used as a safety component of a product or is a product covered by the regulations or directives listed in Annex I (e.g. the Toy Safety Directive) and is required to undergo their third-party assessment procedure.

Are EU importers required to comply with the AI Act?

Yes, the regulation contains requirements affecting importers of certain AI systems. For instance, importers of high-risk AI systems have to follow the requirements contained in Article 23, such as the following:

a. Ensure that the relevant conformity assessment procedure was carried out

b. Check whether the provider of the AI system has drawn up the technical documentation

c. Confirm whether the AI systems come with the CE marking

d. Check whether the non-EU provider has appointed an authorised representative
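The importer checks listed above could be tracked with a small checklist structure. This is a hypothetical sketch: the class and field names are illustrative, and Article 23 contains further obligations that are not modelled here.

```python
from dataclasses import dataclass, fields
from typing import List


@dataclass
class ImporterChecks:
    """One flag per importer check listed above (names are illustrative)."""
    conformity_assessment_done: bool
    technical_documentation_drawn_up: bool
    ce_marking_present: bool
    authorised_representative_appointed: bool


def outstanding_checks(checks: ImporterChecks) -> List[str]:
    """Return the names of the checks that have not yet been satisfied."""
    return [f.name for f in fields(checks) if not getattr(checks, f.name)]


if __name__ == "__main__":
    shipment = ImporterChecks(True, True, False, True)
    print(outstanding_checks(shipment))
```

An empty list means all four checks in this sketch have passed. It does not by itself establish compliance with the regulation.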

Additionally, product manufacturers that sell high-risk AI systems under their own trademark are considered providers and thus must comply with all the obligations for providers, such as creating a Declaration of Conformity or technical documentation. This would, most likely, also include companies that import products from outside the EU and sell them under their own brand.
